Expanding Brain Face Filter

When we first discovered Instagram face filters, we were somewhat late to the party, but that didn’t curb our skyrocketing fascination and enthusiasm. After the obvious “puking type” and “mapping cucumbers onto our eyes” experiments, new ideas for filters kept flooding in, and with each new concept, it seemed that another, even better one was just around the corner.

To launch our Dinamo face filter series, we transformed the ubiquitous expanding brain meme into an AR face filter. In the original meme—which many will remember began circulating on Reddit in 2017—a sequence of four images depicts various brain sizes, communicating the idea of expanding mental enlightenment. The expanding brain usually implies intellectual superiority, but in meme form, it often suggests the very opposite.

For our face filter, we modelled an entire 3D character and its expanding brain, which maps onto an Instagram user’s upper body and face. The user can then control how quickly their brain expands by tapping or holding their finger to the screen. Below, our face filter collaborator and developer Moritz Tontsch explains the process behind making Expanding Brain.

Moritz Tontsch on Developing the Expanding Brain Face Filter

Face filters add a virtual layer on top of a camera’s filmed input. Face-tracking technology can follow facial features in front of a camera and simulate a 3D scene around them. This makes it possible to extend the physical body virtually with 3D elements, images, and visual effects, and to alienate the face from its natural form.

In this project, we tried to translate the expanding brain meme into a face filter while also creating a fun and shareable experience. We wanted the filter to be conceptually and graphically as close as possible to the original meme. We did make some small changes though, in order to enhance the usability of the filter. For example, we adjusted the first step, where the original meme displays a skeleton; for our filter, we use the user’s face as the first step, with the brain expanding out from there.

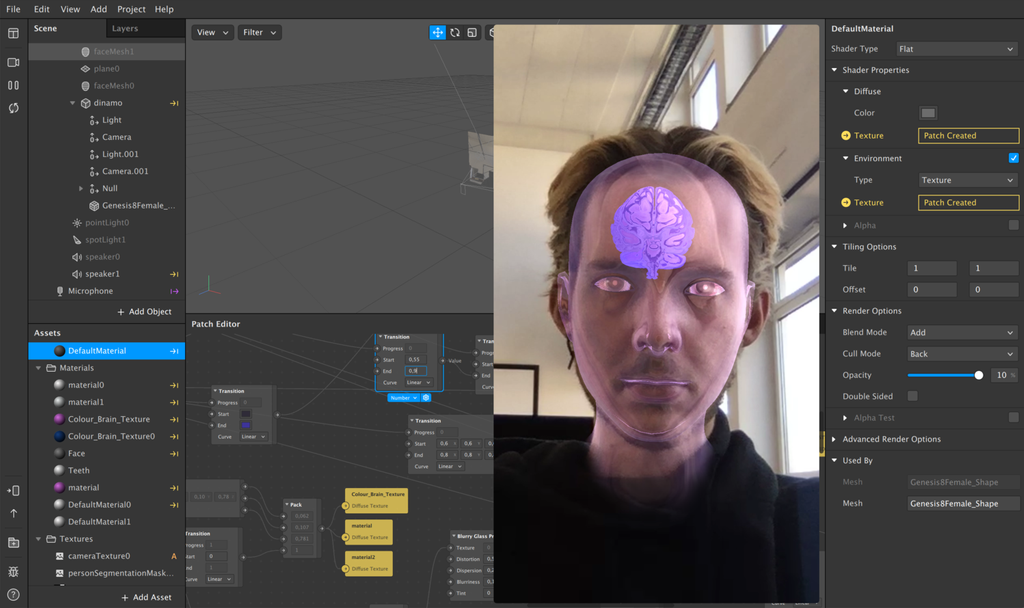

The expanding brain meme displays the human body in an abstracted form, without any personal facial features. The figure nonetheless reads as male, because it shows features commonly associated with the male sex. Our face filter needed to be inclusive of a range of genders, so we chose a 3D model with more androgynous facial features. We created the style of the body by using the camera input as an environment texture and feeding it into a ‘glass shader.’ The white glow in the last phase is created in the same way.

The meme’s background is relatively plain and monotone in color. For the face filter, we integrated these colors—as well as the abstract human model—with the camera input, in order to make the filter more in sync with the situation being captured. In the first step, the physical world is completely visible. The augmented, virtual world dominates the scene as the brain expansion climaxes in the last steps of the filter—by the end, the real environment is no longer visible.
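The shift from a fully visible physical world to a fully virtual one can be pictured as a crossfade. The following is a minimal sketch in plain Python, not Spark AR code: the function names `virtual_weight` and `mix`, and the assumption that the fade is linear across the steps, are ours for illustration and are not taken from the actual filter.

```python
def virtual_weight(step, last_step):
    """How strongly the virtual scene shows at a given step.

    Assumption: the weight grows linearly from 0.0 (first step, camera
    only) to 1.0 (last step, virtual scene only).
    """
    return step / last_step


def mix(camera_rgb, virtual_rgb, weight):
    """Linearly blend one camera pixel with one virtual pixel.

    weight is clamped to [0.0, 1.0]; 0.0 returns the camera colour
    unchanged and 1.0 returns the virtual colour unchanged.
    """
    t = min(max(weight, 0.0), 1.0)
    return tuple((1.0 - t) * c + t * v for c, v in zip(camera_rgb, virtual_rgb))


# Example: a red camera pixel fading into a blue virtual pixel over four steps.
camera_pixel = (1.0, 0.0, 0.0)
virtual_pixel = (0.0, 0.0, 1.0)
fade = [mix(camera_pixel, virtual_pixel, virtual_weight(s, 3)) for s in range(4)]
```

In the first entry of `fade` only the camera colour remains, and in the last entry only the virtual colour remains, which mirrors the first and final steps described above.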

The different states depicted in the expanding brain meme were translated into steps for the filter, which animate and swell into one another when the screen is tapped. The animations were built with a system of patch groups, which let the animated scale, color, and transparency values of the rendered model transition seamlessly. The abstract model, which the user eventually ‘transforms’ into, was prepared and rigged in Blender. The implementation, scene building, and interaction of the face filter were done in Spark AR Studio.
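The step logic can be approximated in a few lines of ordinary code. The sketch below is plain Python rather than Spark AR patches, and everything in it is a hypothetical illustration: the `StepState` fields, the four keyframe values, the `BrainFilter` class, and the `speed` parameter are assumptions, not values from the real filter. It shows one way a tap could advance a target step while scale, glow, and opacity interpolate smoothly toward it.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StepState:
    scale: float    # size of the brain model
    glow: float     # strength of the glow colour
    opacity: float  # visibility of the virtual model


# Illustrative keyframes, one per step (values are made up).
STEPS = [
    StepState(1.0, 0.0, 0.0),
    StepState(1.3, 0.3, 0.4),
    StepState(1.7, 0.6, 0.8),
    StepState(2.2, 1.0, 1.0),
]


def lerp(a, b, t):
    """Linear interpolation between a and b."""
    return a + (b - a) * t


def state_at(position):
    """Interpolate between the two keyframes surrounding a fractional step."""
    position = min(max(position, 0.0), len(STEPS) - 1)
    index = min(int(position), len(STEPS) - 2)
    t = position - index
    a, b = STEPS[index], STEPS[index + 1]
    return StepState(
        lerp(a.scale, b.scale, t),
        lerp(a.glow, b.glow, t),
        lerp(a.opacity, b.opacity, t),
    )


class BrainFilter:
    def __init__(self):
        self.target = 0      # step requested by the user
        self.position = 0.0  # current, possibly fractional, step

    def tap(self):
        """Each tap requests the next step, up to the last one."""
        self.target = min(self.target + 1, len(STEPS) - 1)

    def update(self, dt, speed=2.0):
        """Move toward the target at `speed` steps per second; return the state."""
        move = speed * dt
        if abs(self.target - self.position) <= move:
            self.position = float(self.target)
        elif self.target > self.position:
            self.position += move
        else:
            self.position -= move
        return state_at(self.position)
```

Calling `tap()` once and then `update(dt)` on every frame moves the model gradually from the first keyframe to the second instead of jumping, which is the kind of seamless transition the patch groups provide.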

Credits:

Original meme: janskishimanski (supposedly)

Face Filter Concept: Dinamo

Development: Moritz Tontsch